Fine-grained visual understanding

from patch-level to pixel-level details

What is spatial intelligence? It may be defined as “the ability to generate, retain, retrieve, and transform well-structured visual images” (D. F. Lohman, Spatial ability and g, 1996) or, more broadly, “human’s computational capacity that provides the ability or mental skill to solve spatial problems of navigation, visualization of objects from different angles and space, faces or scenes recognition, or to notice fine details” (H. Gardner).

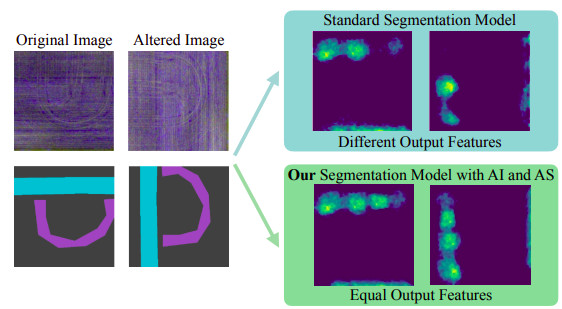

Understanding the fine-grained details in images (or other forms of sensing) is a form of spatial reasoning that is useful for many applications. It counts as spatial reasoning because the informative content of a fraction of the image (from a patch to a single pixel) depends on the context provided by its surroundings. My team and I have been developing fine-grained visual understanding algorithms for various use cases, such as driving scenes, aerial imagery, and industrial applications.

Driving scenes

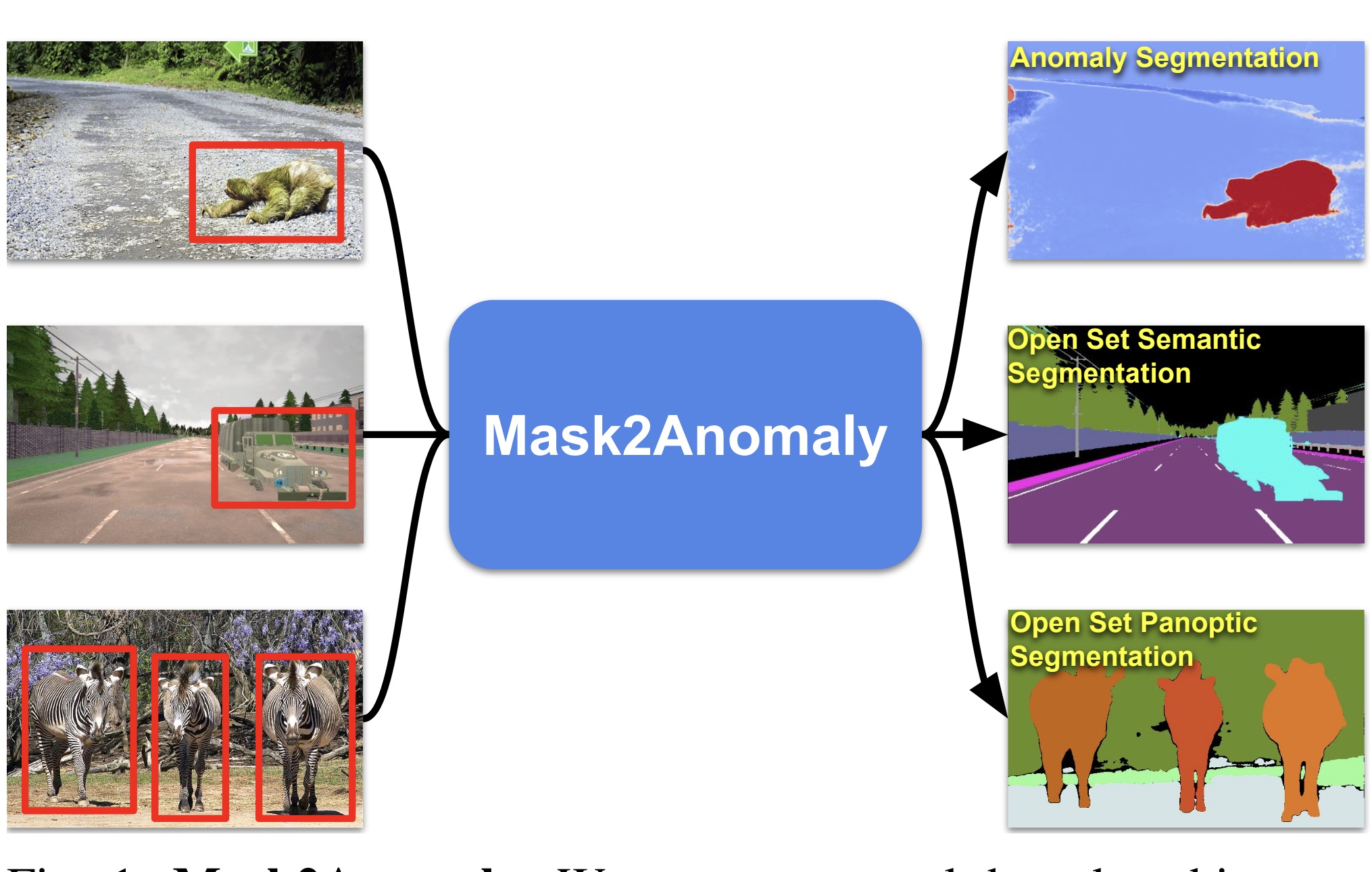

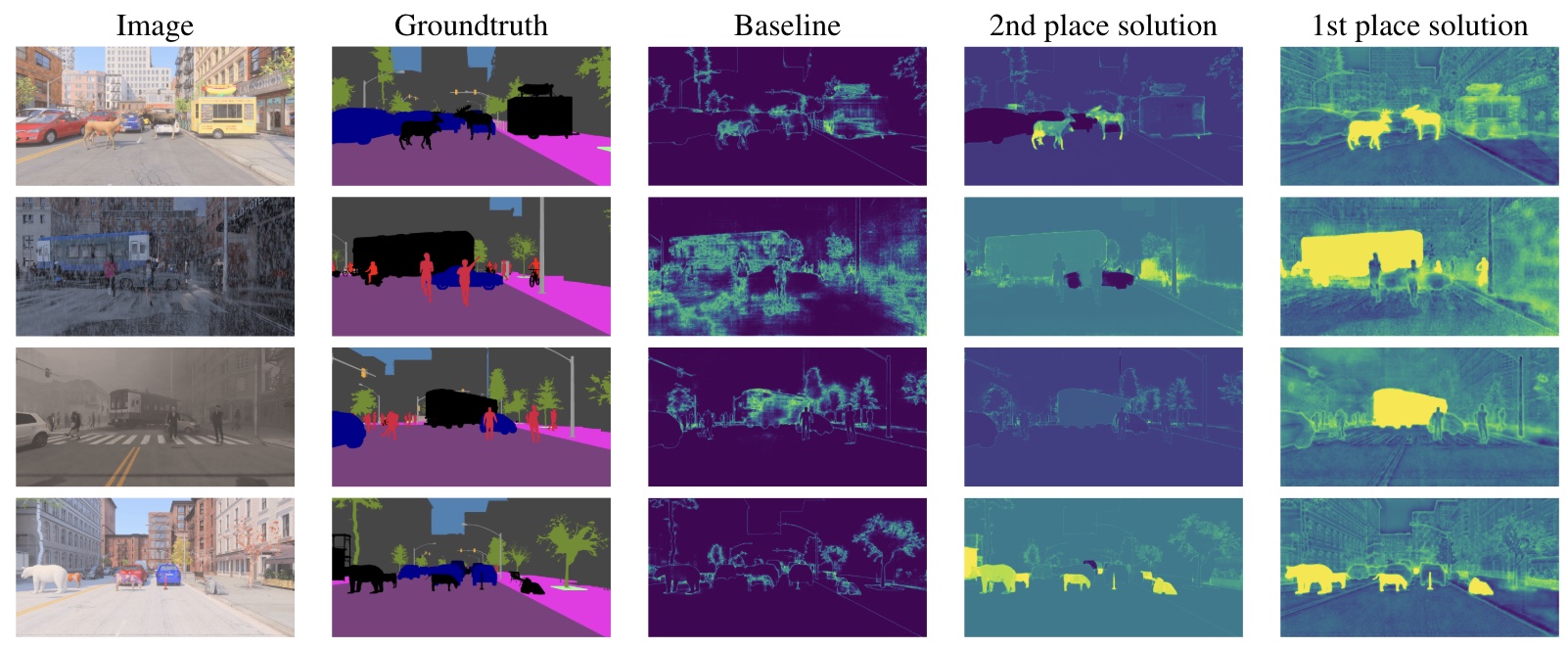

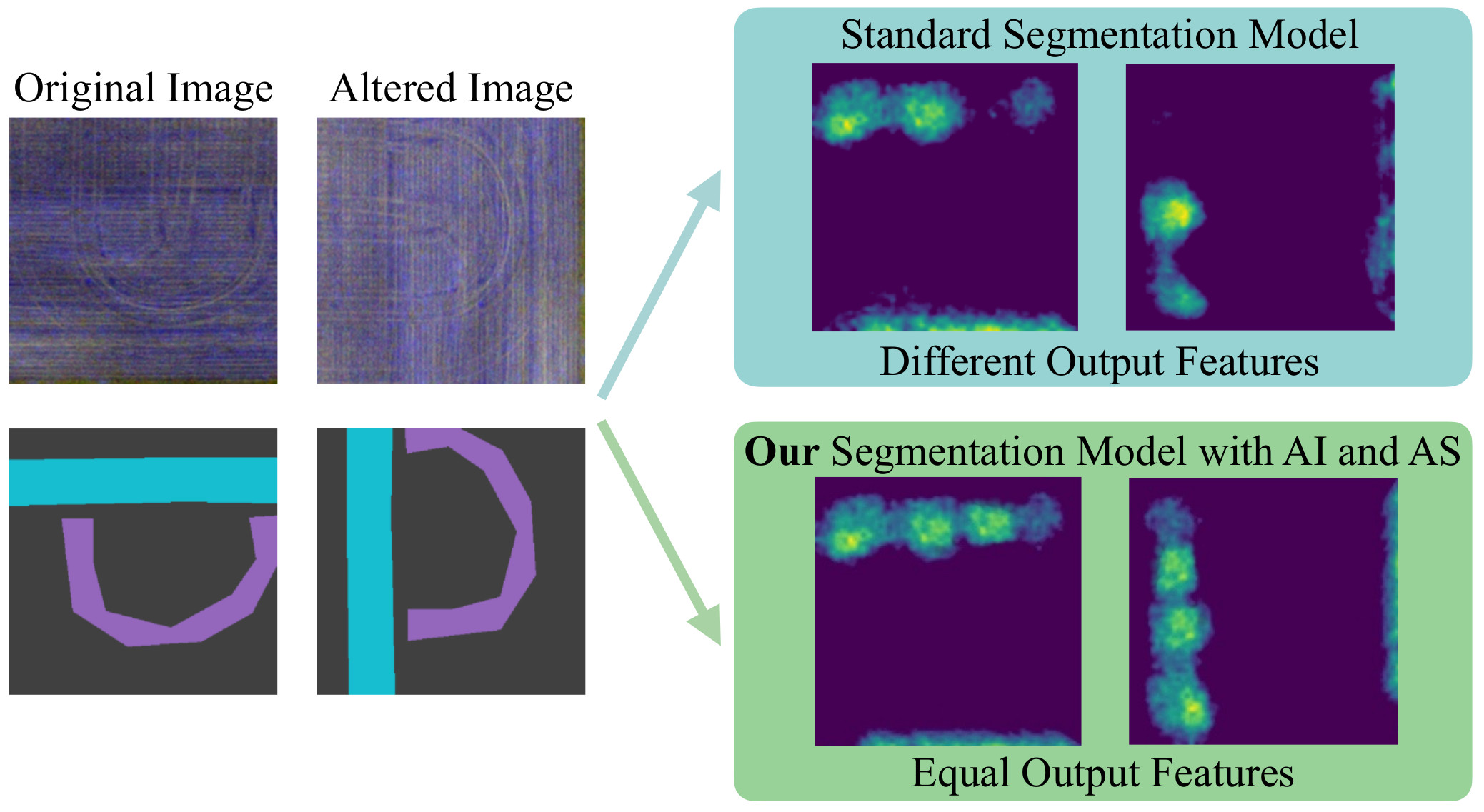

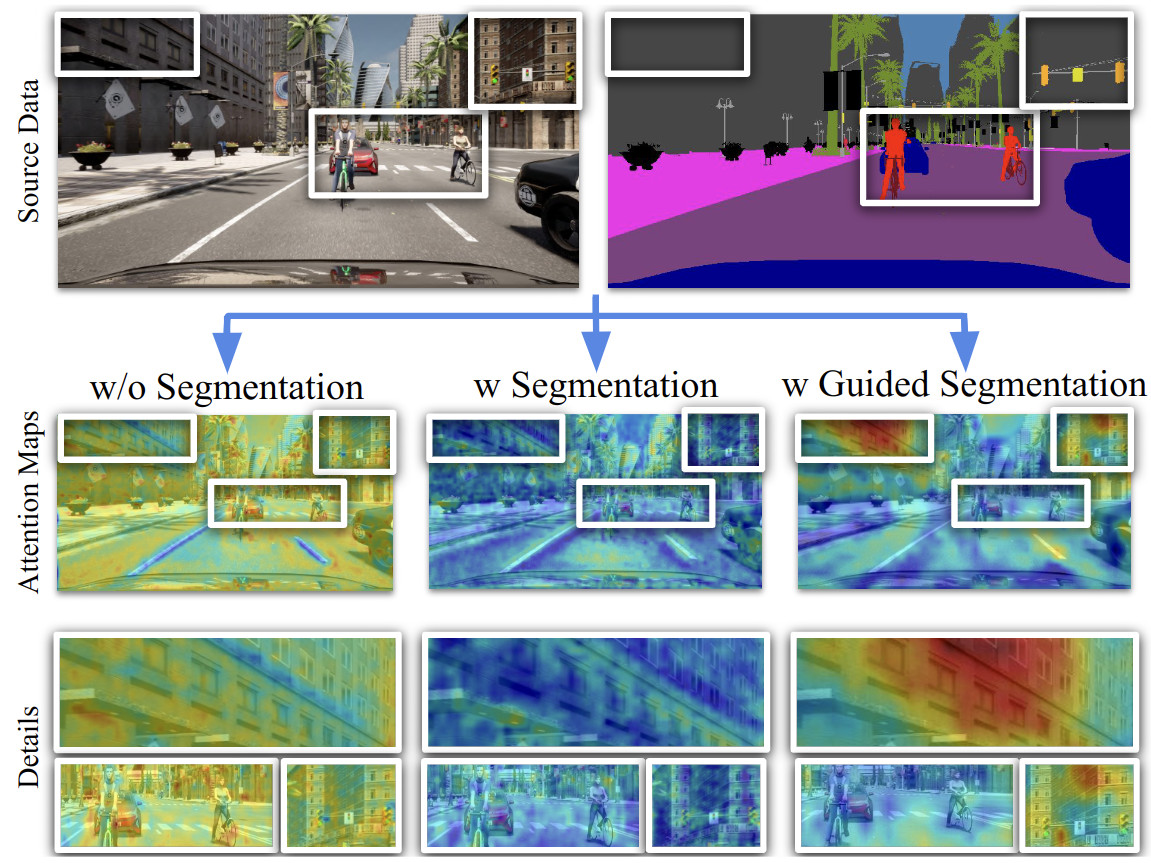

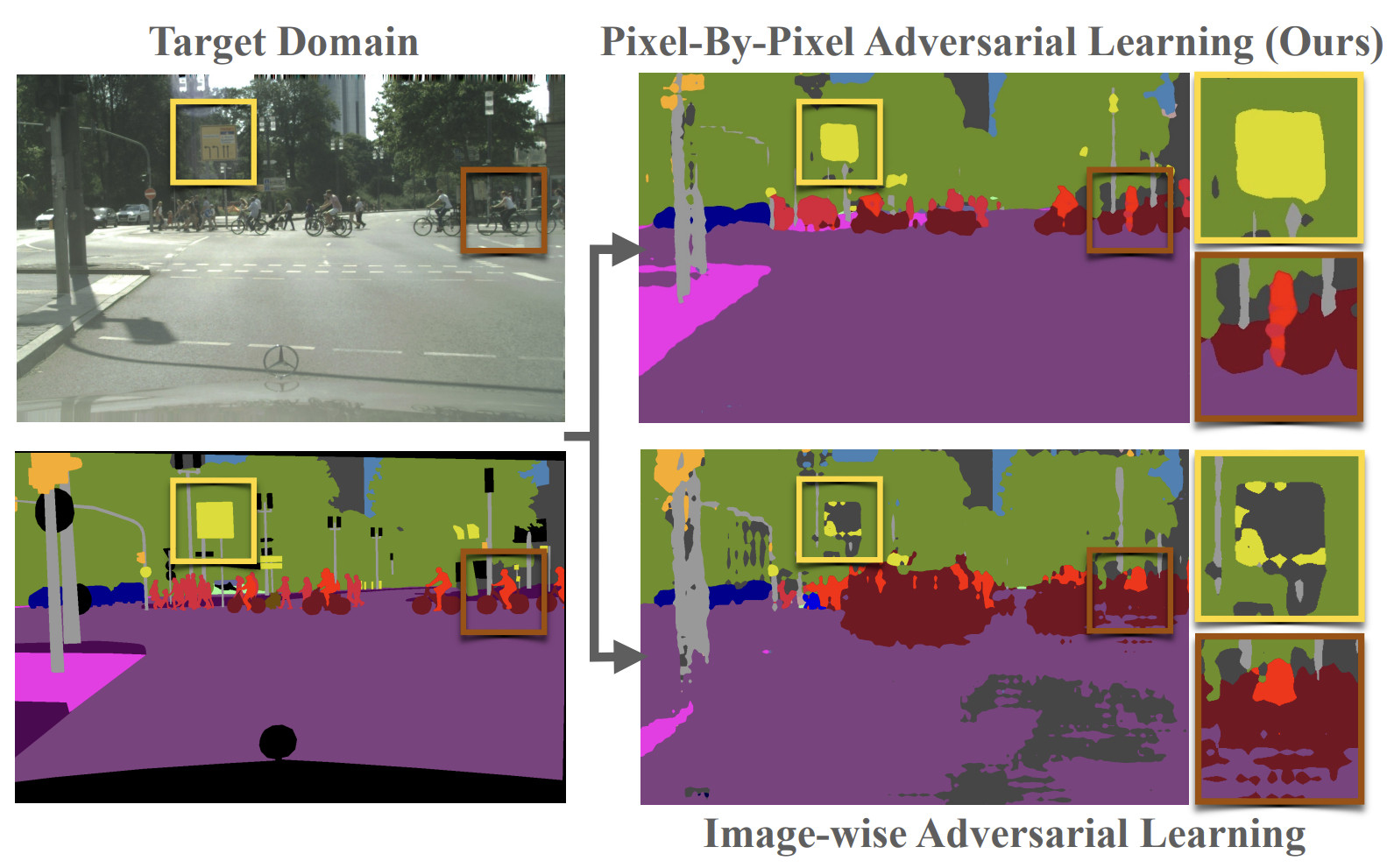

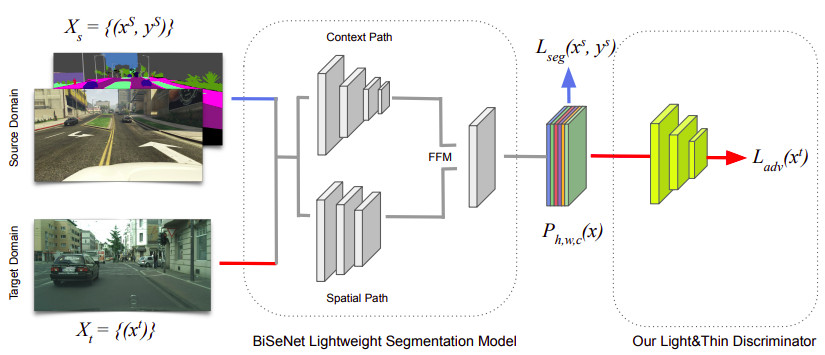

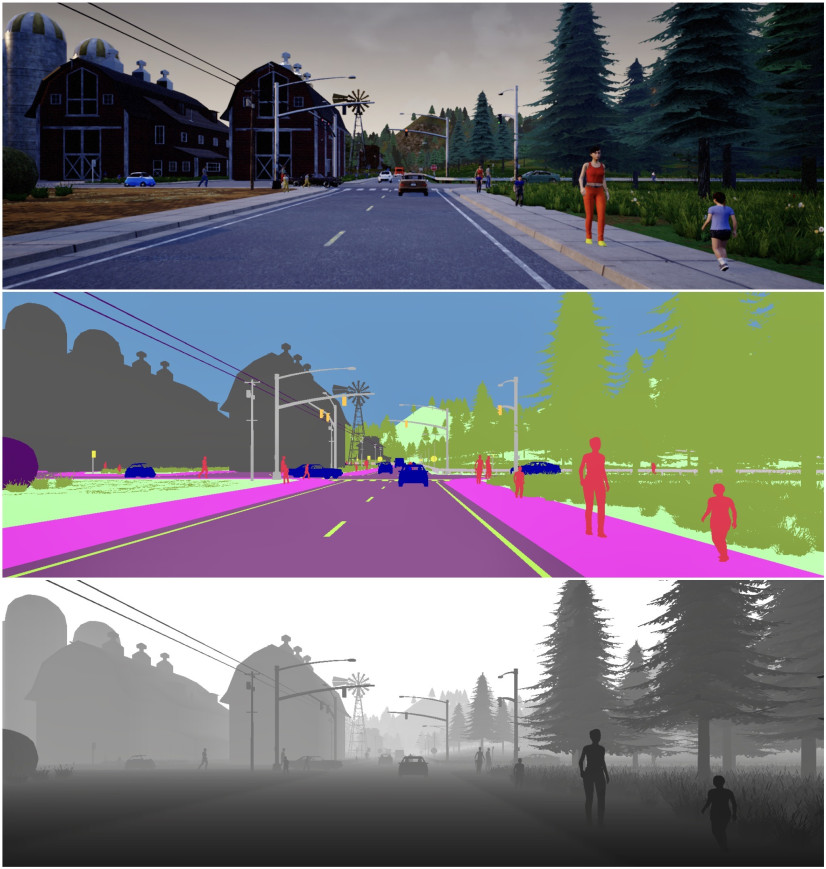

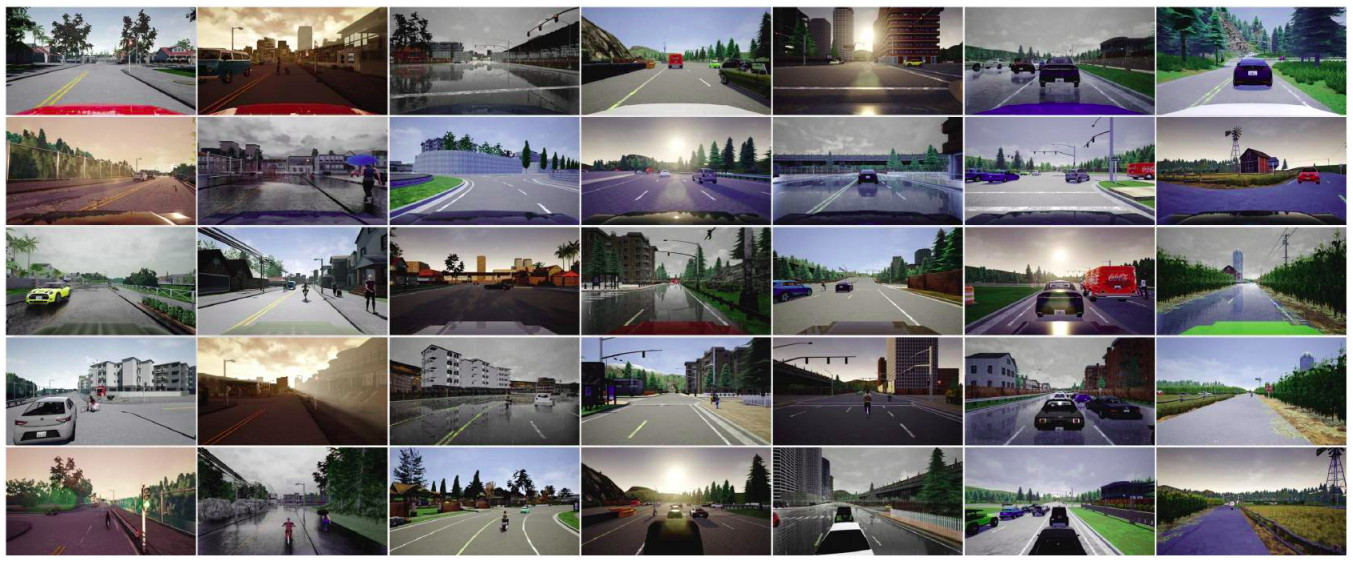

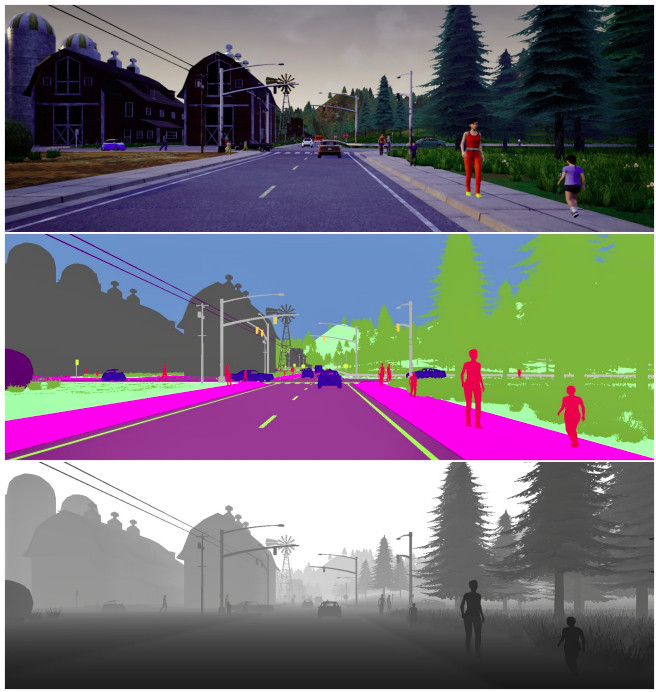

Understanding the fine details in images collected from cameras onboard vehicles is extremely important for developing the perception stack of an autonomous or assisted car. It is also a challenging task because of variations in scenery, weather, and illumination conditions, the cost of labeling, and other factors. At Vandal we have been working on these topics, developing tools that support research, such as the IDDA dataset, which contains 105 different scenarios that differ in weather conditions, environment, and camera point of view. We have also developed several algorithms, with a focus on robustness across domains (Pixel-by-Pixel Cross-Domain Alignment for Few-Shot Semantic Segmentation) and on the ability to handle anomalies (Mask2Anomaly: Mask Transformer for Universal Open-set Segmentation).

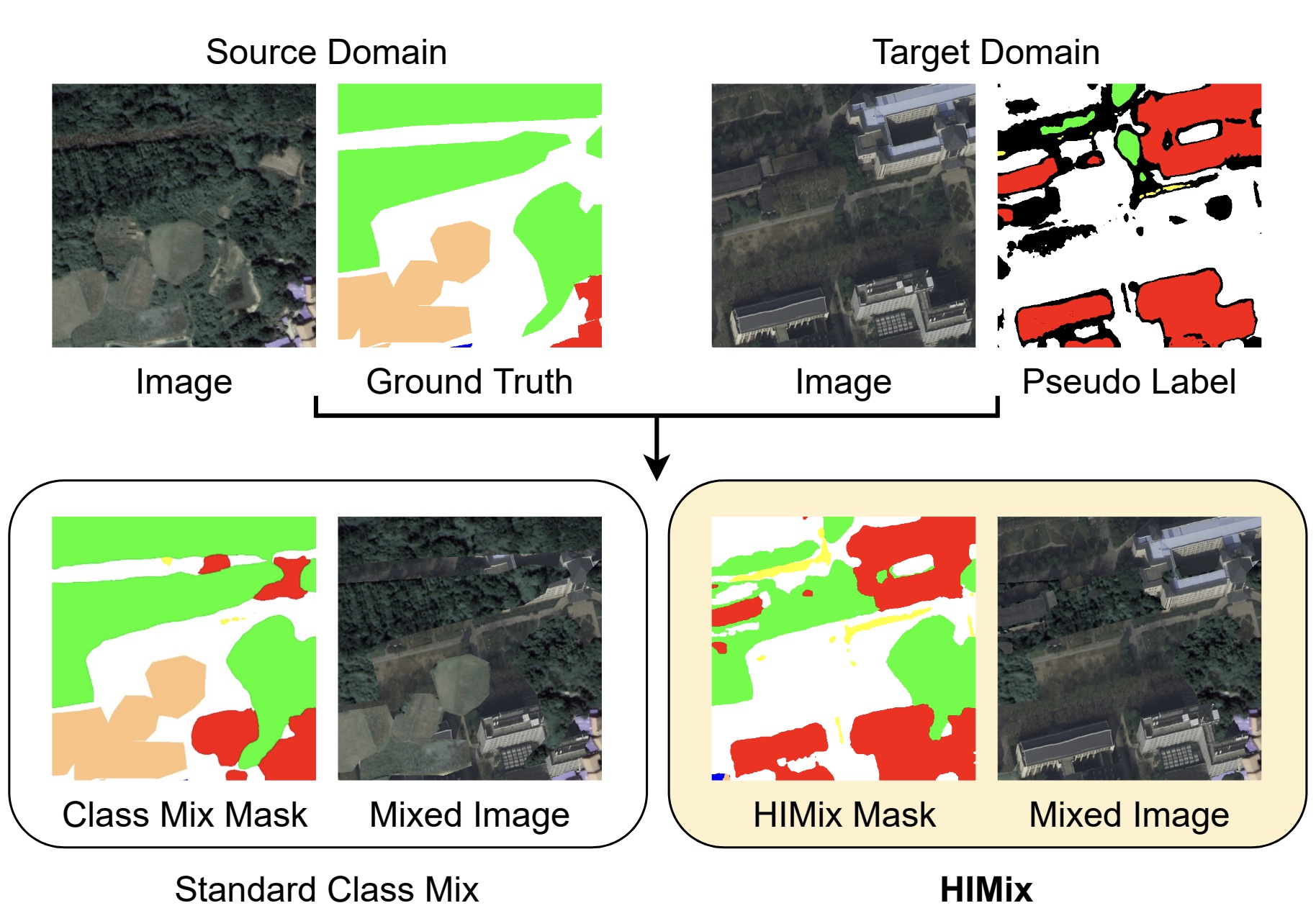

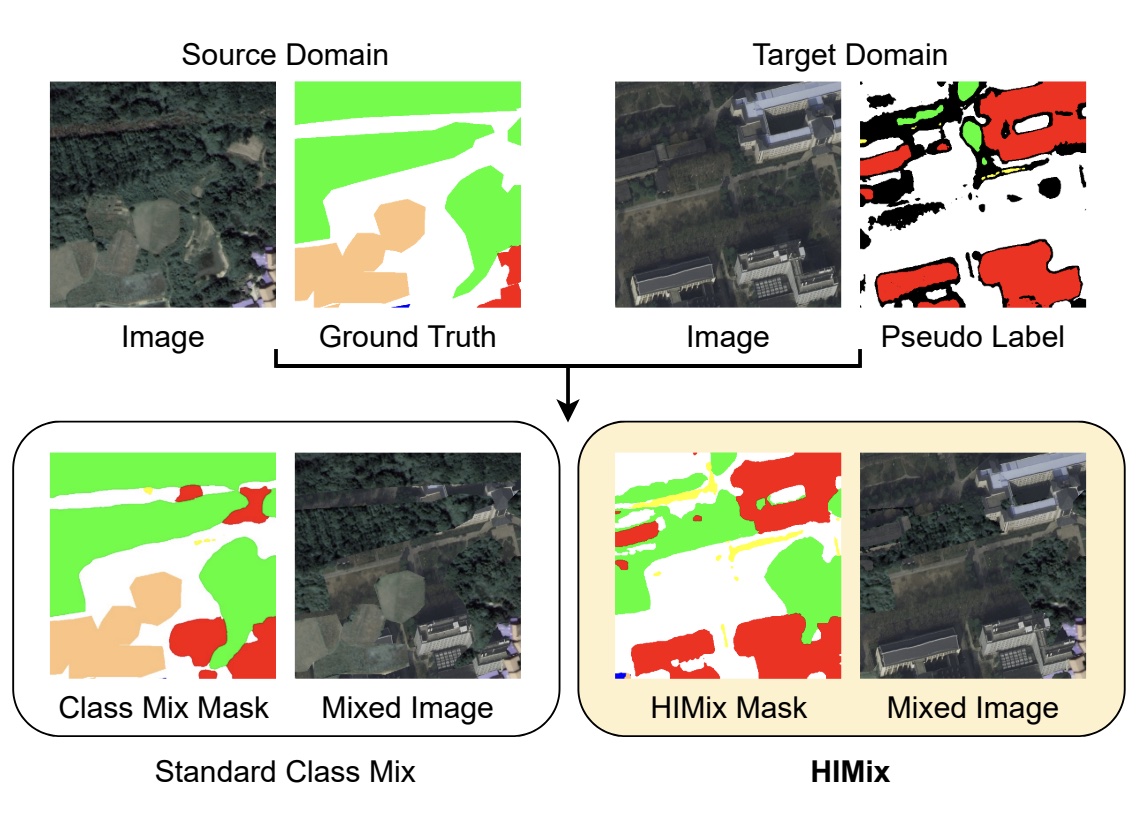

Aerial scenes

Aerial imagery contains a lot of fine-grained information that can be extremely valuable, e.g., to monitor urban development or support environmental monitoring. Unlike in driving scenes, where the street is always at the bottom and the sky at the top, in aerial imagery the model cannot rely on a fixed semantic structure of the scene. Moreover, the relative scale of different visual elements may vary enormously. We have developed solutions that address the domain shift problem in aerial segmentation and are tailored to the specificities of these scenes.
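
To make the point about the missing fixed layout concrete, here is a toy sketch in plain Python. It is not one of our methods: the 4x4 label maps, the class ids (0 for sky, 1 for road), and the function names are all hypothetical and chosen only for illustration. It measures how well a pixel's class can be guessed from its row index alone, first on a driving-like layout and then on the same content seen under arbitrary orientations, as a rough stand-in for aerial views.

```python
from collections import Counter


def rotate90(label_map):
    """Rotate a square 2D label map (list of rows) 90 degrees clockwise."""
    return [list(row) for row in zip(*label_map[::-1])]


def row_prior_accuracy(label_maps):
    """Accuracy of guessing each pixel's class from its row index alone.

    For every row index, the most frequent class across all maps is used
    as the prediction for every pixel in that row.
    """
    height = len(label_maps[0])
    counts = [Counter() for _ in range(height)]
    for label_map in label_maps:
        for r, row in enumerate(label_map):
            counts[r].update(row)
    correct = sum(c.most_common(1)[0][1] for c in counts)
    total = sum(sum(c.values()) for c in counts)
    return correct / total


# Hypothetical driving-like layout: sky (0) on top, road (1) at the bottom.
driving = [
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [1, 1, 1, 1],
    [1, 1, 1, 1],
]

# Hypothetical aerial-like set: the same content under all four
# 90-degree rotations, since there is no canonical "up" from above.
aerial = [driving]
for _ in range(3):
    aerial.append(rotate90(aerial[-1]))

driving_acc = row_prior_accuracy([driving])
aerial_acc = row_prior_accuracy(aerial)
print(f"row-prior accuracy, driving layout: {driving_acc:.2f}")
print(f"row-prior accuracy, aerial layout:  {aerial_acc:.2f}")
```

In this toy setting the row index fully determines the class for the driving layout, while for the rotated set it does no better than chance between the two classes. Real scenes are far less clean, but the sketch shows why a positional prior that helps in driving scenes cannot be assumed in aerial imagery.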